Abstract

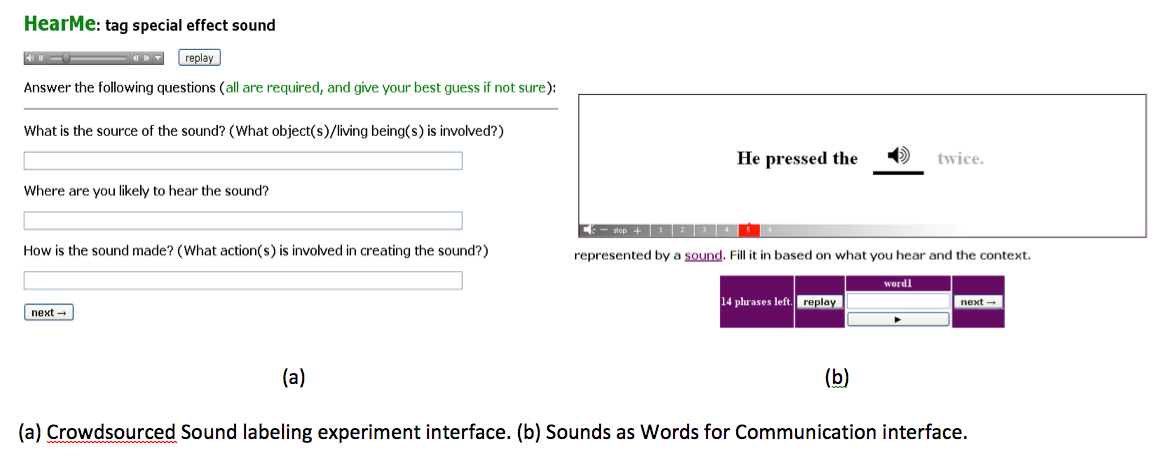

Auditory displays have been used in both human-machine and computer interfaces. However, the use of non-speech audio in assistive communication for people with language disabilities, or in other applications that employ visual representations, is still under-investigated. In this paper, we introduce SoundNet, a linguistic database that associates natural environmental sounds with words and concepts. A sound-labeling study was carried out to verify SoundNet associations and to investigate how well the sounds evoke concepts. A second study was conducted using the verified SoundNet data to explore the power of environmental sounds to convey concepts in sentence contexts, compared with conventional icons and animations. Our results show that sounds can effectively illustrate concepts, especially concrete ones, and can be applied to assistive interfaces.

Materials

PDF | Slides | BibTeX